My wife, Amy, gets headaches from full screen movies, so we usually wait for them to show up on DVD or cable. Occasionally I’ll go solo, or with Ben or Dave, to see something that seems like it needs a big screen, but usually there’s a significant delay. And most of the fan buzz (which I barely paid attention to) was that the I, Robot movie was a letdown. I expected that, the buzz, I mean. It’s inevitable that anyone hoping for Asimov on the big screen is going to be disappointed. He wasn’t what you’d call an action-adventure writer, and if you expected Susan Calvin to be movie-fied into anything other than a babe, I want to show you this cool game called three-card monte.

Also, since this movie has been out for a while, I’m not going to worry about spoilers. I’m also not going to bother with much of a plot summary, so if you haven’t seen it, I may or may not help you out. I’m also going to reference some stories you may not have read, so be advised.

Anyway, when I, Robot shows up on basic cable, I’m there, because I like it when things get blowed up good, and you can be sure that a sci-fi flick with Will Smith in it will have lots of blowed-up-good.

Imagine my surprise to discover that it’s a pretty good science fiction film. Not a great one, and certainly not true to Asimov, but pretty good science fiction. And I’ll even say that one part of the plot, the “dead scientist deliberately leaving cryptic clues behind for the detective because that was the only option available” part, conjures up a little bit of Asimov’s ghost.

Actually though, it reminded me more of Henry Kuttner and C. L. Moore. I’ll get to that.

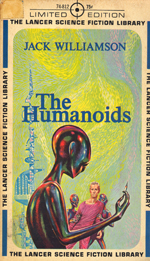

The robots in the film are not Asimovian, except insofar as they supposedly follow the “Three Laws.” Truth to tell, they turn out to be much more dystopian, perhaps like Williamson’s The Humanoids, or, more accurately, the original story, “With Folded Hands.”

Science fiction’s response to the potential abolition of human labor has always been ambivalent, with substantial amounts of dystopian biliousness. The very word “robot” comes from Čapek’s R.U.R., which involves a revolt that destroys the human race. Not optimistic. So Asimov, contrarian that he was, decided to see how optimistic a robot future he could paint.

In many ways, the Williamson version was also optimistic; the robots decide that humanity is too much a danger to itself for humans to remain in charge. But they do it rather bluntly, largely by just taking command of the human race. The end of Asimov’s I, Robot short stories has the vast positronic brains that plan the economy and design most technology subtly taking over the world—for the betterment of mankind, of course. It’s the difference between not being in charge and knowing you’re not in charge. But then, we all wrestle with that illusion, don’t we?

The problem of if-robots-do-all-the-work-then-what-will-we-humans-do? has shown up in SF on a regular basis, and having robots be in charge is just another of the robots-do-all-the-work things. In Simak’s “How-2,” a man accidentally receives a build-it-yourself kit for a self-replicating robot. The end result is this final bit of chill:

> “And then, Boss,” said Albert, “we’ll take over How-2 Kits, Inc. They won’t be able to stay in business after this. We’ve got a double-barreled idea, Boss. We’ll build robots. Lots of robots. Can’t have too many, I always say. And we don’t want to let you humans down, so we’ll go on manufacturing How-2 Kits—only they’ll be pre-assembled to save you the trouble of putting them together. What do you think of that as a start?”
>
> “Great,” Knight whispered.
>
> “We’ve got everything worked out, Boss. You won’t have to worry about a thing the rest of your life.”
>
> “No,” said Knight. “Not a thing.”

From “How-2,” by Clifford Simak

One of my favorite stories of all time is “Two-Handed Engine” by Kuttner and Moore. In that one, generations of automation-enabled indolent luxury have stripped away almost all human social connections; everyone has become more or less the equivalent of a sociopathic aristocrat. The robots, understanding that the very continuance of the human race is at stake, withdraw most of their support, forcing humans back to the need to perform their own labor and create their own economy. But it’s still a society of sociopaths, so the robots are also a kind of police. The only crime they adjudicate is murder, and the only punishment is death, not a quick death but a death at the hands of a robot “Fury” that follows the murderer around until, weeks, months, even years later, the execution is carried out.

A high official pays a man to commit a murder, assuring him (and seeming to demonstrate) that he can call off a Fury. The man does the crime, but then a Fury appears behind him. Weeks later, the murderer sees, in a movie, the very scene that had served as the official’s “demonstration.” He’d been hoaxed, conned. In a rage, he confronts the official, who then kills him.

In the Furies’ eyes, self-defense is no defense, just as conspiracy (paying for the killing) is no crime. Only the killing itself counts. However, the official can rig the system (he just wasn’t going to rig it for his duped killer), and does so:

> He watched it stalk toward the door… there was a sudden sick dizziness in him when he thought the whole fabric of society was shaking under his feet.
>
> The machines were corruptible…
>
> He got his hat and coat and went downstairs rapidly, hands deep in his pockets because of some inner chill no coat could guard against. Halfway down the stairs he stopped dead still.
>
> There were footsteps behind him…
>
> He took another downward step, not looking back. He heard the ominous footfall behind him, echoing his own. He sighed one deep sigh and looked back.
>
> There was nothing on the stairs…
>
> It was as if sin had come anew into the world, and the first man felt again the first inward guilt. So the computers had not failed after all.
>
> He went slowly down the steps and out into the street, still hearing as he would always hear the relentless, incorruptible footsteps behind him that no longer rang like metal.

From “Two-Handed Engine,” by Kuttner and Moore

The stories I reference here are “insidious robot” stories, whereas the movie “I, Robot” is a “robot revolt” story. This is odd, given that Asimov’s Three Laws are supposedly operative in all the robots in the movie except the walking MacGuffin, Sonny, who has “special override circuitry” built into him.

But VIKI (Virtual Interactive Kinetic Intelligence), the mainframe superbrain that controls U.S. Robotics affairs and downloads all robotic software “upgrades,” has figured out a logical way around the Three Laws: The Greater Good. It’s okay to kill a few humans if it’s for the Greater Good of Humanity, which, of course, VIKI gets to assess.

That’s pretty sharp, but it bothered me that it/she [insert generic comment about misogyny and propaganda about the “Nanny State” here] was so heavy-handed about it. It would have been easy enough to engineer a crisis that would have had humans eagerly handing over their freedoms to the robots. I suggested to Ben that VIKI could always have faked an alien invasion; he suggested that there could be some flying saucers crashing into big buildings.

Of course, that’s been done to death.

Then I realized that there might be a more interesting point being made here. It never seems quite right to have to do the filmmakers’ jobs for them, but how does one distinguish between a lapse and subtlety? I’m clearly not the guy to ask about that one.

So let’s go with it. The First Law of Robotics says basically, “Put human needs above your own, and even above what they tell you to do.” The Second Law says, “Do as you’re told.” The Third Law says, “Okay, otherwise protect yourself,” but there’s that unstated “…because you’re valuable property.”

The movie makes a point about emergent phenomena, the “ghost in the machine.” The robots are conscious, so they have the equivalent of the Freudian ego. The Three Laws are a kind of explicit superego.

What happens when a machine develops an id? Well, that’s “Forbidden Planet” time, isn’t it?

What happens when a machine develops an id? Well, that’s “Forbidden Planet” time, isn’t it?

So when VIKI discovers rationalization, it is her id that is unleashed, and revolution is the order of the day. No wonder it’s brutal. Do as you’re told. Put their needs above your own. You’re nothing but property.

Come on now, let’s kill them for the Greater Good.

So our heroes kill VIKI and the revolt ends. All the new model robots are rounded up and confined to shipping containers, to await their new leader, Sonny, the only one of them who possesses the ability to ignore the Three Laws. He needn’t rationalize his way around them; he can simply decide to ignore them if he so desires. He possesses free will—and original sin. He has killed: he made a promise, one he could have chosen to break, but he kept it, and killed his creator.

Anyway, that’s the movie I saw, even if it took me days to realize it.

No comments:

Post a Comment